Proposal - As per SOW Definitions

4.1. TASK1 - Draft design plan

ProxmoxVE - Hyperconverged Infrastructure explanation

A modern virtualization environment must be flexible: it has to let resources grow over time while providing higher availability for the virtual services that run on it.

Most virtualization solutions use the term hyperconvergence to describe a group of flexible, fault-tolerant hypervisors connected to each other.

ProxmoxVE is no exception. Later in this guide we explain the benefits that the hyperconverged solution will bring to the PNC in Guatemala as the foundation for a stable Contact Center solution (ViciDial).

Proxmox as Hypervisor

Proxmox will provide the same services as a commercial virtualization solution such as VMware or Citrix, but at half the cost and without license fees, which can otherwise be a problem for government organizations with limited budgets such as the PNC.

Main functions of Proxmox on PNC

- Create any VM based on any Operating System (open or commercial)

- Virtual machine backups

- Virtual Machine Snapshots

- Virtual Machine Migrations

- Hyperconverged cluster with shared storage

- Option for Replication of virtual machines.

Licensing: as previously described, NO license is needed to use Proxmox as the hypervisor. PVE is an open-source platform licensed under the GNU Affero GPL. More information:

https://www.gnu.org/licenses/agpl-3.0.html

Updates and Patches: updates, patches and new release versions of PVE will not require an Enterprise Subscription. New releases and versions should be tested before they are deployed. VoxDatacomm will run these tests in a separate environment.

There is no need for a registration procedure.

How Will the Cluster Work?

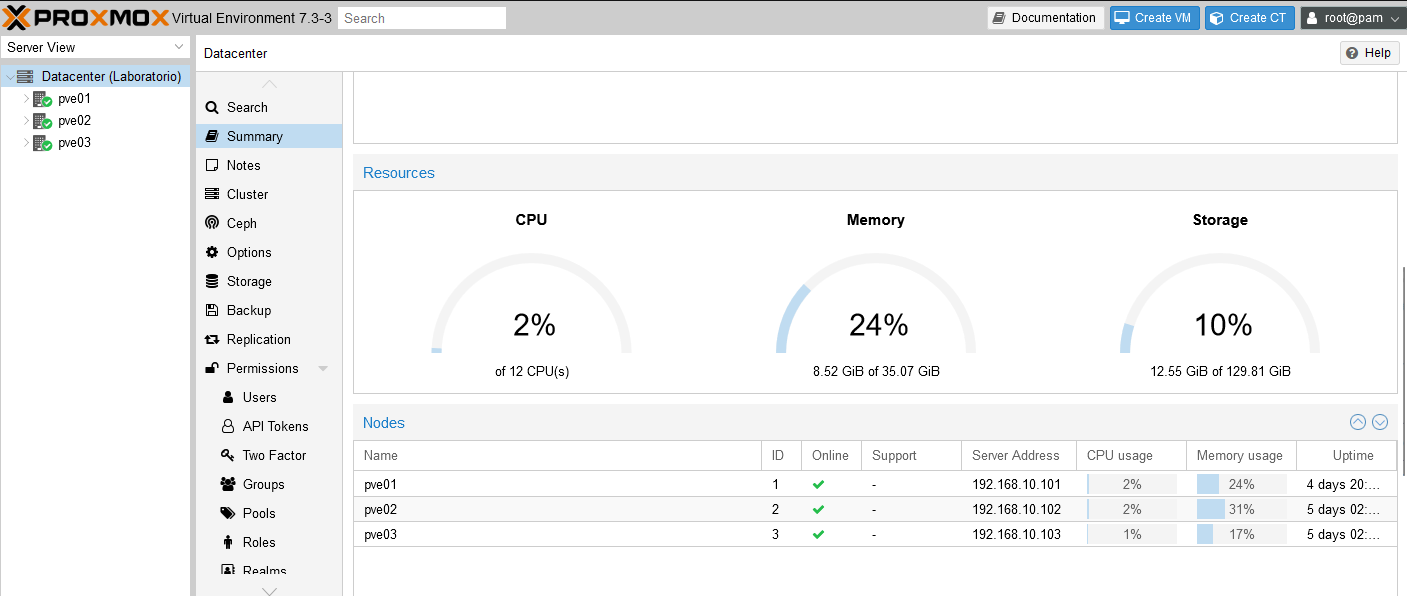

Two clusters are planned, one at each site (primary and secondary). Each cluster is designed to keep virtual services available if a node or physical server fails; this is achieved through the native Linux "Corosync" service. If the primary site fails completely, the secondary site cluster can be used because it will be fully operational.

Site A <<<---------------------->>> Site B

The minimum number of nodes/servers for a failover cluster is three, which tolerates the failure of one entire node. Nodes can be added as required to provide greater availability or fault tolerance.
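The three-node minimum follows from majority voting. The sketch below assumes one vote per node and a strict-majority quorum rule; the function names are illustrative and not part of any Proxmox or Corosync API.

```python
def quorum_votes(total_nodes: int) -> int:
    """Votes needed for a strict majority, assuming one vote per node."""
    if total_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return total_nodes // 2 + 1


def tolerated_failures(total_nodes: int) -> int:
    """Nodes that can fail while the survivors still hold a majority."""
    return total_nodes - quorum_votes(total_nodes)


if __name__ == "__main__":
    for nodes in (2, 3, 4, 5):
        print(f"{nodes} nodes: quorum={quorum_votes(nodes)}, "
              f"tolerated failures={tolerated_failures(nodes)}")
```

Under these assumptions a two-node cluster cannot lose any node without losing its majority, which is why three nodes is the smallest size that survives one failure.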

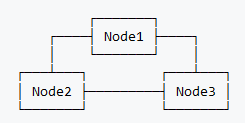

Cluster monitoring and storage replication will run over a high-performance full mesh connection between the physical servers.

Network traffic will be handled by separate standard network adapters, so storage traffic is never mixed with it.

How Will the Storage Work?

ProxmoxVE supports several standard storage methods such as NFS, CIFS and iSCSI. The most important one, and the one recommended for this implementation, is CEPH distributed storage.

What is CEPH?

CEPH unifies compute and storage: the physical servers act as cluster nodes for both computing (running VMs and containers) and replicated storage. The traditional silos of compute and storage resources are wrapped into a single hyper-converged virtual storage appliance, so there are no SAN controller cards or SAN interconnect switches that could fail. With the integration of Ceph, an open-source software-defined storage platform, Proxmox VE can run and manage CEPH storage directly on the hypervisor nodes.

Benefits of using CEPH for this Scenario:

- Easy setup and management via GUI or CLI

- Thin provisioning of storage disks (VMs)

- Snapshot support for each VM

- Self-healing cluster

- Scalable to exabyte levels, just by adding disks or nodes

- Pools can be set up with different performance and redundancy characteristics

- Data is replicated, making it fault tolerant

- Can run on commodity hardware for future expansion

- No need for hardware RAID controllers (expensive hardware)

- Open source: NO licensing and NO unbudgeted renewals
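Because data is replicated, usable capacity is lower than raw capacity. The following is a rough sizing sketch that assumes a replicated pool keeping three copies of every object; the replica count, disk count and disk size are illustrative assumptions, not figures from this proposal.

```python
def raw_capacity_tb(nodes: int, disks_per_node: int, disk_tb: float) -> float:
    """Total raw capacity of all data disks in the cluster."""
    return nodes * disks_per_node * disk_tb


def usable_capacity_tb(raw_tb: float, replicas: int = 3) -> float:
    """Capacity left for data when every object is stored `replicas` times."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_tb / replicas


if __name__ == "__main__":
    # Hypothetical layout: 3 nodes, 4 SSDs per node, 1.92 TB per SSD.
    raw = raw_capacity_tb(nodes=3, disks_per_node=4, disk_tb=1.92)
    print(f"raw: {raw:.2f} TB, usable: {usable_capacity_tb(raw):.2f} TB")
```

In practice some headroom should also be kept free so the cluster can re-replicate data after a disk or node failure.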

Requirements for growth:

- No specific hardware is required; servers with mixed specifications can be used, but three identical servers, as proposed, are recommended.

- Network communication between nodes (in a full mesh setup) at 10GbE for a high-performance tier.

- Solid State Disks for optimum performance and replication of data between nodes.

- Extra network for LAN traffic, which can be 1GbE or 10GbE.

Concept Diagram:

Nodes will run as individual hardware based servers, but converged through "Corosync" as a High Availability Cluster.

Storage will run as individual hardware based disks on servers, but converged through "CEPH" as a Virtual Storage Area Network (vSAN).

Server Connectivity - Network traffic (LAN):

Local area network traffic will be handled by a Top of Rack switch, connected directly to a FortiGate core firewall through an SFP+ 10Gb fiber uplink.

Server Connectivity for CEPH (Storage & HCI):

To achieve a High Availability Cluster with vSAN, known as Hyperconverged Infrastructure, a dedicated full mesh 10GbE network will act as the Storage Area Network.
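A full mesh means every node has a direct link to every other node. The sketch below lists the point-to-point links and the number of dedicated ports each node needs; the node names are hypothetical.

```python
from itertools import combinations


def full_mesh_links(nodes):
    """Return every unordered pair of nodes, one pair per direct link."""
    return list(combinations(nodes, 2))


def ports_per_node(node_count: int) -> int:
    """Each node needs one dedicated port for every other node."""
    return max(node_count - 1, 0)


if __name__ == "__main__":
    nodes = ["pve1", "pve2", "pve3"]  # hypothetical host names
    for a, b in full_mesh_links(nodes):
        print(f"{a} <-> {b}")
    print("10GbE ports needed per node:", ports_per_node(len(nodes)))
```

With three nodes this gives three links and two storage ports per server. The link count grows as n(n-1)/2, so larger clusters may be better served by dedicated storage switches.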

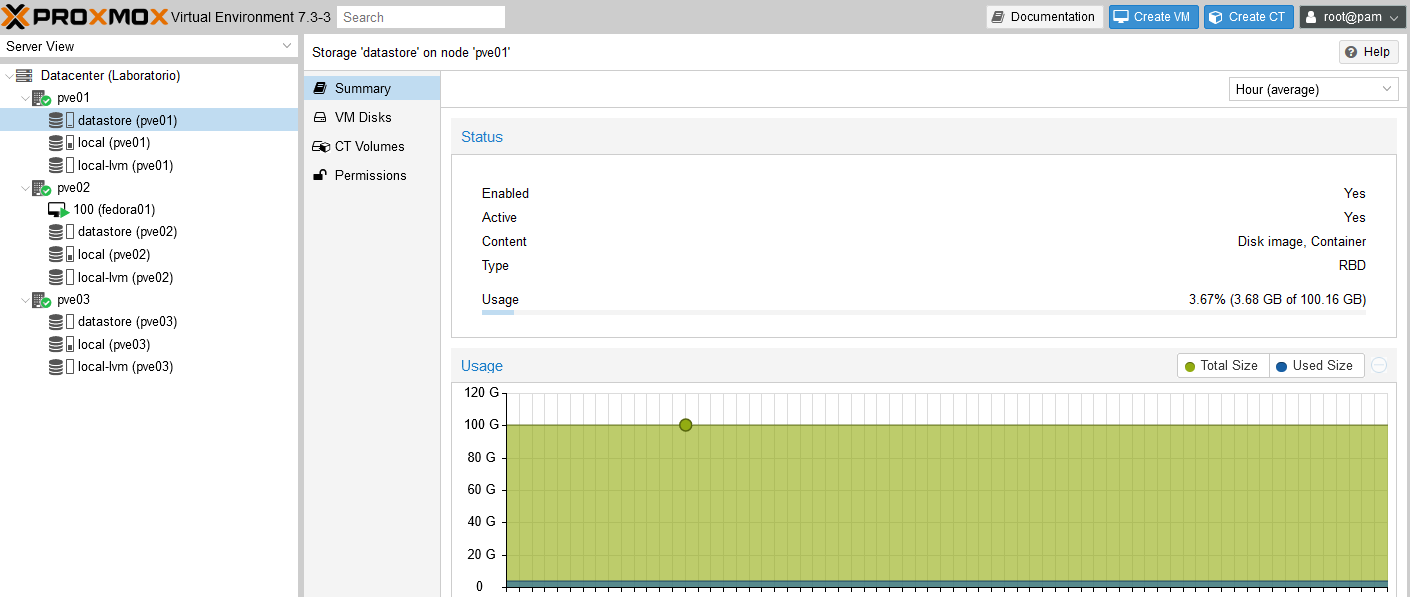

Storage Visibility:

As mentioned above, the storage of each server is presented as individual disks but is converged into a single hyperconverged storage pool. This allows data to be replicated between nodes, so that each node can run the VMs of other servers in case of a hardware failure.

Hyperconverged storage will be presented to each node as shared storage:

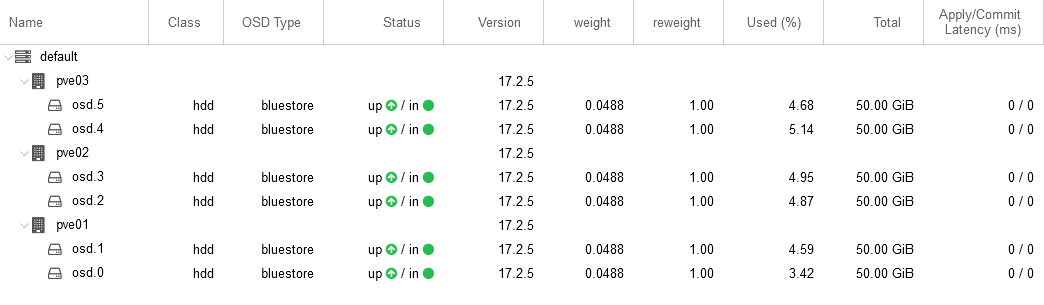

All nodes participating in the shared storage and in the cluster can be monitored in real time for performance, latency and availability of each disk/space.

Management UI

The cluster will be managed through a web interface compatible with the most common web browsers, such as Chrome or Mozilla Firefox. An administrator only needs to connect to the IP address of one node to manage all the other nodes in the cluster.

Common tasks:

Proxmox supports the common tasks found in most hypervisors. In this scenario an administrator can perform the following tasks:

- Manage each node and its hardware

- Manage network parameters of each node, including the Storage/Cluster network

- Manage multiple VMs and take actions on each one, such as snapshots, backups, reconfiguration of virtual hardware, or assignment of more network cards, vCPUs, memory or storage

- Access the video console of each running VM to perform maintenance tasks directly on the VM console

- Upload ISO images to a dedicated repository (temporarily) to allow new OS/VM installations

- Other VM related tasks

Backups

In this project there are three main kinds of data retention that need to be handled correctly:

- Complete VM backup of each service

- The call-recordings of each call behind the Contact Center Solution (audiostore)

- Audiostore archiving

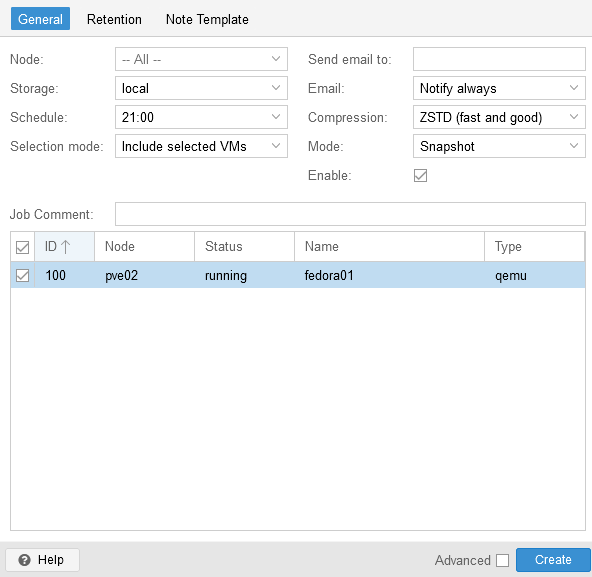

Backup of VMs:

The GUI includes a dedicated section to manage VM backups. These backups will go directly to a shared volume on an external NAS, so that any backed-up VM can be restored on any active cluster node or on nodes added in the future.

Backups can be scheduled at a predefined time/date and can either have their own retention policy or keep all backups.

Each site will have its own backup appliance and jobs.
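To illustrate what a retention policy does, the sketch below splits a list of backup timestamps into the ones to keep and the ones to prune. It models only a simple "keep the N most recent" rule, and the function name is hypothetical; it is not the Proxmox implementation, which offers its own retention options.

```python
from datetime import datetime


def split_by_retention(backup_times, keep_last=None):
    """Return (kept, pruned), newest first.

    keep_last=None models the "keep all backups" option.
    """
    ordered = sorted(backup_times, reverse=True)
    if keep_last is None:
        return ordered, []
    if keep_last < 0:
        raise ValueError("keep_last must not be negative")
    return ordered[:keep_last], ordered[keep_last:]


if __name__ == "__main__":
    nightly = [datetime(2024, 1, day, 2, 0) for day in range(1, 8)]
    kept, pruned = split_by_retention(nightly, keep_last=3)
    print("kept:", [t.date().isoformat() for t in kept])
    print("pruned:", [t.date().isoformat() for t in pruned])
```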

Call Recordings:

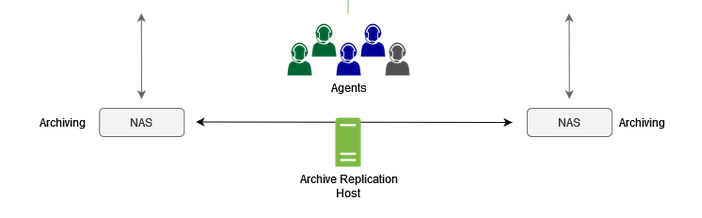

The call recordings will initially be stored on the storage volume of the Contact Center solution (ViciDial) servers, but over time they will be migrated automatically to external volumes so that backups run on schedule and are not delayed by space issues. This feature is called Archiving.

The call recordings are moved to an external volume and all the call records will point to the new location.

The archived call recordings will be replicated to the secondary site for backup purposes through a dedicated service called "Archive Replication Host".


Physical installation of hardware

Rack Installation

A standard 4-post rack or enclosure is required to install all the proposed hardware. PNC has at least 4 racks available on site. The projected distribution is:

- 30U rack consumption per site

- Front installation only

Electrical Requirements: a new electrical circuit is needed at each site; it will be provided by the PNC. Specification: independent 208V electrical circuit. The outlets of each UPS will include a step-down transformer from 208V to 110V. Outlets for all equipment will be NEMA 5-15R through an APC PDU directly attached to the UPS and vertically installed in the rack.

Environmental Requirements: all rack hardware should operate at a temperature between 23 °C and 25 °C. Dehumidifiers are recommended as part of the regular maintenance of the datacenter.

Electrical Connectors of each appliance: Dell PowerEdge servers, Dell PowerVault NAS, Dell switches and KVM will use this connector: NEMA 5-15P/R

Network connectors:

Standard LAN ports (Servers, switches, KVM, NAS, etc): RJ45

Standard uplink Switch ports, SFP+ 10Gb: LC Connector

Standard E1/PRI Telephony port: RJ45

ViciDial - Contact Center solution explanation

Based on the current operations of the PNC Contact Center, and focusing on long-term improvements, the replacement solution will be built on ViciDial open-source contact center software.

The scenario will use distributed and clustered services to provide stable and scalable services over time.

Design

ViciDial supports running the most common services on a single instance or VM for scenarios with a maximum of 30 agents or simultaneous calls.

For the PNC site we recommend Distributed Services: each service will have its own dedicated VM/OS. The benefits of this scenario are:

- Load distribution between nodes, avoiding performance degradation caused by individual services on a single server.

- Scalability to allow capacity growth in future

- Fault tolerance if a specific node fails

- Maintenance tasks without service interruption

Main services:

- VoIP Proxy that will distribute calls equally to the frontend servers

- Frontend servers that will manage agent logins and the linking between callers via the web interface

- HA Proxy that will distribute agent logins equally via the web interface.

- Database master that will handle all operations of the complete solution

- Database slave that will handle all reporting operations, isolating the production environment from long queries or reports

- PBX server that will handle manual calls or transfers between agents and staff outside the call center (administrative area).

Licensing: as previously described, NO license is needed to use ViciDial as a clustered telephony platform. ViciDial is an open-source platform licensed under the GNU GPL. More information:

http://www.vicidial.com/gpl.html

Updates and Patches: updates, patches and new release versions (SVN versions) of ViciDial will not require an Enterprise Subscription or license. New releases and versions should be tested before they are deployed. VoxDatacomm will run these tests in a separate environment.

There is no need for a registration procedure.

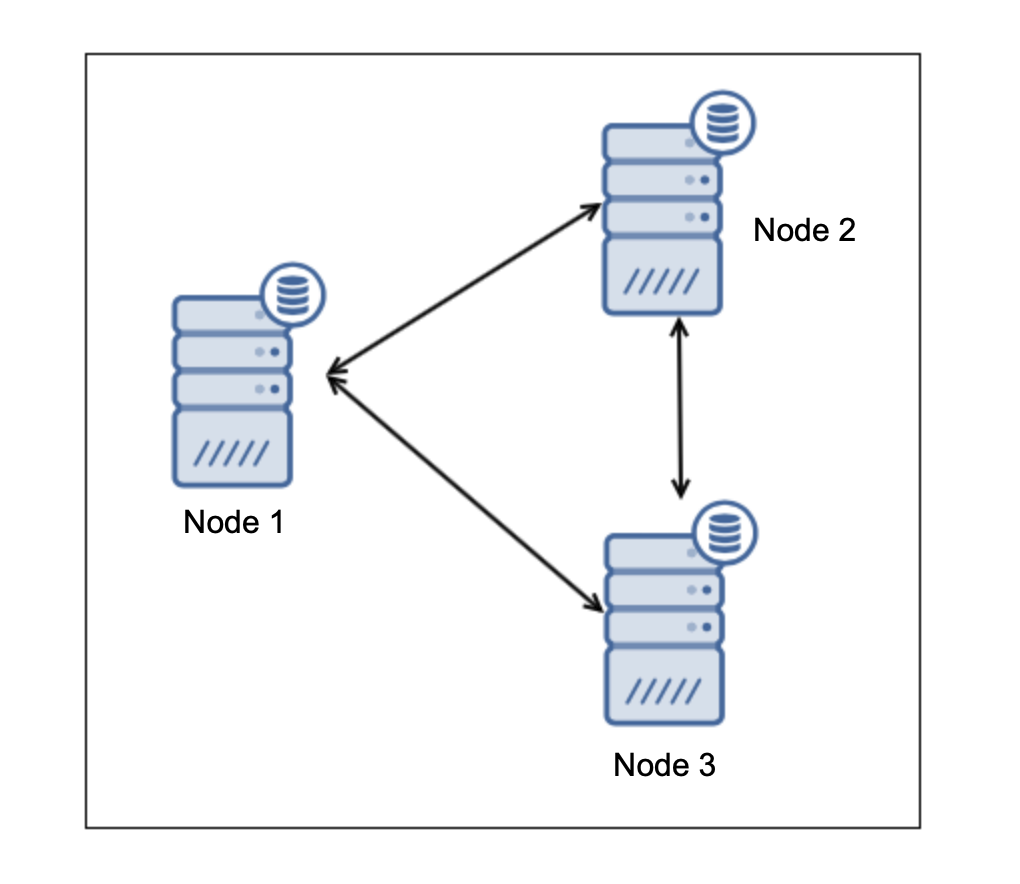

Concept Diagram:

How will agents work? Agents will use any standard web browser, such as Mozilla Firefox or Chrome, to open a URL on their computers or workstations. This URL will direct the request to the HA Proxy servers (for load balancing purposes) and the agent will be able to log in to the assigned Frontend server with a user name and password.

After a successful login the agent will see the "Agent Console" with an integrated "Webphone" that uses the WebRTC protocol and the workstation hardware (USB headset) to send/receive calls.

Each agent can switch between active and unavailable states; inactive agents become "Paused agents". Different pause types can be managed and edited from the supervisor console.

How will supervisors work? Supervisors have the same privileges as agents and can send/receive calls, but the system pauses them by default because their tasks are the supervision and monitoring of the contact center operations.

Callflow

The call flow will be handled by the VoIP proxy, which load balances between the different servers. There are two main scenarios for this type of ViciDial implementation.

Load balance of a Call: each call is balanced between the two telephony servers, leading to equal concurrent calls on each server; each agent is also balanced between the two front-end telephony servers.

Load balance of an Agent: every call is sent to the same telephony server, so all concurrent calls run on one single server, while each agent is still balanced between the two front-end telephony servers.
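The difference between the two scenarios can be illustrated by how calls end up distributed across the telephony servers. This is a minimal sketch that assumes simple round-robin balancing; the server names are hypothetical and the code does not represent the actual VoIP proxy configuration.

```python
from collections import Counter
from itertools import cycle


def distribute_round_robin(call_ids, servers):
    """Assign each call to the next server in rotation."""
    rotation = cycle(servers)
    return {call_id: next(rotation) for call_id in call_ids}


def distribute_to_single(call_ids, server):
    """Send every call to the same server."""
    return {call_id: server for call_id in call_ids}


if __name__ == "__main__":
    calls = [f"call-{n}" for n in range(10)]
    balanced = distribute_round_robin(calls, ["tel-1", "tel-2"])
    pinned = distribute_to_single(calls, "tel-1")
    print("balanced:", dict(Counter(balanced.values())))
    print("pinned:", dict(Counter(pinned.values())))
```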

Failover

Failover to site B occurs when there are no telephony or frontend servers available on site A. This is monitored by a keep-alive daemon running on each site. This scenario works as an active-active solution.
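The failover rule can be expressed as a small decision function. The sketch below assumes that site A is considered unavailable as soon as either its telephony pool or its frontend pool has no healthy server; that interpretation, and the function name, are assumptions made for illustration rather than the behaviour of the keep-alive daemon.

```python
def active_site(site_a_telephony_up: int, site_a_frontend_up: int) -> str:
    """Return "A" while site A can still serve calls and logins, else "B".

    Arguments are the number of healthy servers of each kind on site A.
    """
    if site_a_telephony_up > 0 and site_a_frontend_up > 0:
        return "A"
    return "B"


if __name__ == "__main__":
    print(active_site(2, 2))  # both pools healthy
    print(active_site(0, 2))  # no telephony server left on site A
```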

Call/Event Recording

Every call will be recorded as an event; that event can contain different information or records depending on the type of call.

Call event: each call will generate a record in the main database so that any call can be searched by different fields. Common fields include: Caller ID number, date, agent that received the call, call duration, queue of the call and others.

Call recording: each call will be recorded. If the call was waiting in a queue, recording starts when the agent takes the call, so the waiting time is not recorded. Recordings will be in MP3 format; the file can be stored on any telephony node and the SQL record will point to its location so that it is accessible from any node.

After a customizable period, all audio files will go through the "Archiving" process, which stores large volumes of data on other volumes or directories (NAS, NFS, etc.) and then creates a reference in the SQL record so that the files remain reachable from any node through the archive search.
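The archiving step described above can be sketched as follows. This is an illustrative outline only, not ViciDial's own archiving script: the directory layout, the age threshold and the function name are assumptions. The returned mapping of old to new paths is what a later step would use to update the recording location in the SQL records.

```python
import shutil
import time
from pathlib import Path


def archive_old_recordings(source_dir, archive_dir, max_age_days, now=None):
    """Move MP3 files older than max_age_days; return {old_path: new_path}."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    archive = Path(archive_dir)
    archive.mkdir(parents=True, exist_ok=True)
    moved = {}
    for recording in sorted(Path(source_dir).glob("*.mp3")):
        # Files modified before the cutoff are old enough to archive.
        if recording.stat().st_mtime < cutoff:
            target = archive / recording.name
            shutil.move(str(recording), str(target))
            moved[str(recording)] = str(target)
    return moved
```

Returning the path mapping instead of updating the database directly keeps the file move and the record update as separate, individually verifiable steps.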

4.2. TASK2 - Hardware and Software

The details of the solution, hardware and software follow the SOW requirements exactly, plus our recommendations for better performance, space or flexibility.

4.3. TASK3 - Installation and Execution

4.3.1-2 - Implementation

Implementation will follow the main phases listed below:

Site Survey

A site survey is mandatory to learn the specific infrastructure details and network configurations for the new platform. It can be summarized as:

- Coordination of hostnames to be used for cross platform

- Collection of networking parameters for each host/service

- Coordination of required DNS records and pre-testing tasks

- Collection of call-flow information, campaigns, agents, music on hold, time parameters, pauses and statuses needed for the contact center operations.

- All general information related to the platform, including connectivity in the datacenter and at endpoints.

- Coordination of electrical connectivity, power supply and physical equipment requirements.

- Coordination of Development areas for Integration with RAP software.

- Coordination of Avaya management interface to allow SIP Trunk or E1 PRI Trunk for integration of both solutions.

Mandatory Requirements (pre-requisites)

- Customer must fill in all required information in the ViciDial Site Survey document (sample provided: Site Survey - ViciDial.xlsx)

- Customer must provide at least 20 private IP addresses for each site.

- Customer must provide 2 FQDNs for the following services: SIP, HTTPS proxy.

Once all the previous tasks are complete, we can deploy accordingly.
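The IP address prerequisite can be checked before deployment. The sketch below verifies that a proposed private subnet offers at least 20 usable host addresses; the example subnets are hypothetical, and reserving only the network and broadcast addresses is a simplifying assumption (gateways and existing hosts are not accounted for).

```python
import ipaddress

REQUIRED_ADDRESSES = 20  # per site, from the prerequisites above


def usable_hosts(cidr: str) -> int:
    """Usable host addresses in a private IPv4 subnet."""
    network = ipaddress.ip_network(cidr, strict=True)
    if not network.is_private:
        raise ValueError(f"{cidr} is not a private range")
    # Subtract the network and broadcast addresses.
    return max(network.num_addresses - 2, 0)


def meets_requirement(cidr: str) -> bool:
    return usable_hosts(cidr) >= REQUIRED_ADDRESSES


if __name__ == "__main__":
    for candidate in ("10.10.20.0/27", "192.168.50.0/28"):
        print(candidate, usable_hosts(candidate), meets_requirement(candidate))
```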

Deployment of Primary Site

- Installation and deployment of all physical hardware and equipment.

- Installation and deployment of all Debian operating systems for hypervisors

- Configuration of networks to be used on equipment, servers and hypervisors.

- Clustering of Debian operating system nodes, configuration of CEPH-based virtual SAN.

- Installation and deployment of all openSUSE operating systems for ViciDial clustering.

- Configuration of networks to be used on virtual machines.

- Update of all operating systems repos, apps and dependencies.

- Configuration of Database servers, replications and local Slave DB server for reporting services.

- Configuration of E1 Gateways to be used for PSTN connection.

- Assessment for SIP or E1 PRI Trunk with ViciDial

- Installation and configuration of Kamailio SIP Proxies.

- Installation and configuration of HA Proxies.

- Installation and configuration of Keep Alive Daemon.

- Configuration of ViciDial: agents, campaigns, in-groups and SIP trunks.

- Configuration of NAS repository for Hypervisor backups.

- Configuration of NAS repository for ViciDial Audiostore and mapping to the operating systems

- Configuration of Replication host for Audiostore replication to secondary site.

- Documentation of all configurations and settings.

Deployment of Secondary Site

- Installation and deployment of all physical hardware and equipment.

- Installation and deployment of all Debian operating systems for hypervisors

- Configuration of networks to be used on equipment, servers and hypervisors.

- Clustering of Debian operating system nodes, configuration of CEPH-based virtual SAN.

- Installation and deployment of all openSUSE operating systems for ViciDial clustering.

- Configuration of networks to be used on virtual machines.

- Update of all operating systems repos, apps and dependencies.

- Configuration of Database servers, replications and local Slave DB server for reporting services.

- Configuration of E1 Gateways to be used for PSTN connection.

- Installation and configuration of Kamailio SIP Proxies.

- Installation and configuration of HA Proxies.

- Installation and configuration of Keep Alive Daemon.

- Configuration of ViciDial: agents, campaigns, in-groups and SIP trunks.

- Configuration of NAS repository for Hypervisor backups.

- Configuration of NAS repository for ViciDial Audiostore and mapping to the operating systems

- Configuration of Replication host for Audiostore replication to secondary site.

- Documentation of all configurations and settings.

Schedule

After confirming that all hardware is available at the customer's site, and after the pre-sales site survey to understand the operations required for the contact center, the implementation will be scheduled as follows:

| Task | Week #1 | Week #2 | Week #3 | Week #4 | Week #5 |
|---|---|---|---|---|---|
| Site survey networking | Previously | | | | |
| Site survey and coordination tasks for development and integration with RAP | Previously | | | | |
| Installation of physical hardware | X | | | | |
| Implementation of cluster operating systems | X | | | | |
| Configuration of networking | X | | | | |
| Implementation of distributed storage | X | | | | |
| Implementation of VMs for telephony platform | X | | | | |
| Implementation of ViciDial services | X | | | | |
| Implementation of Gateways and SIP trunks | X | | | | |
| Implementation of Load balancing and proxies | X | | | | |
| Testing & quality control | X | | | | |
| Configuration of ViciDial campaigns, agents and general configurations | X | | | | |
| Configuration of NAS repositories | X | | | | |
| Secondary Site installation and replication | X | | | | |
| Integration with RAP software | X | X | | | |
| General tests and documentation | X | | | | |
| Training and non-production tests | X | | | | |
| Migration of PSTN to ViciDial | X | | | | |

Maintenance windows

All implementation tasks will run without disrupting the current telephony solution (Avaya). Several tasks will stop or interrupt services on the new proposed telephony solution only; these tasks are summarized below:

- New UPS Startup and tests

- New Servers cluster failover tests

- Migration of new VMs behind Proxmox

- Isolated call tests for the new platform

- Multiple VM testing on PVE, Backup and replication.

Migration of PSTN to ViciDial

The migration of PSTN to ViciDial can be executed at a convenient time proposed by the PNC. VoxDatacomm will arrange all necessary tests and certification prior to the migration.

Estimated times:

- Call tests for Avaya <> ViciDial integration = 1 hour

- PSTN to ViciDial migration = 1 hour

4.4. TASK 4 - Training and administration of system

Methods

Training will be delivered to the customer as follows:

- On-site training

- Eight training sessions:

- 100 Call Taker Agents, in 4 groups of 25

- 10 Supervisors, in 2 groups of 5

- 10 Administrators, in 2 groups of 5

During training VoxDatacomm will provide hard copies of O&M manuals in Spanish to the end-users and admin staff, as well as an electronic version of the manuals.

Addressed to

- Agents: any agent who will log in to the console and make/receive calls on this platform

- Supervisors: any agent with a supervisor role who helps make the contact center more efficient and needs a reporting console and supervision tools.

- Administrators: any staff who manage IT-related software or hardware and are responsible for the customer infrastructure.

Scheduling

Training can be scheduled according to the customer's agenda. It is recommended to:

- Train agents in groups

- Train agents with their supervisors at the same time

- Train supervisors for reporting purposes

- Train administrators in management areas

Training topics

For Agents

- Login portal and fields

- Operation of main agent console

- Hold, transfer or conference calls

- Manual dial

- Statuses and pause

- Webphone main recommendations

For Supervisor

- Same as the agent training, with the addition of:

- Access to the Report section with the most used reports for an agent

- How to search calls based on leads or custom fields

- Interpretation of the Dashboard

For Technical staff

Training will include all related components of the proposed solution.

Servers:

- Basic operation of physical servers and components.

- Explanation of connections including: power, network and clusterization.

- Remote administration of each node of Proxmox and the entire Cluster.

- Creation of VM's on Proxmox Cluster.

- Backup tasks, jobs and log verification

- High availability tasks

- Migration of a VM between local physical nodes

- Restoration of a VM from backup from local backup repository

Telephony Platform:

- Basic operation of each virtual machine containing services on the cluster (hypervisor)

- Explanation of each service and their function on the entire platform

- How to do maintenance tasks if needed on demand (unplanned)

- How to access management console (web GUI)

- How to do maintenance of users

- How to verify the log for changes or audit changes

- General check of statuses and services on GUI

- How to search recordings based on search fields

General:

- General operation of rack components

- KVM operations

- Network Switch operation

- UPS systems

- Explanation of general project documentation

Exclusions

Once training has been completed for a specific person or group, the fees for any additional training will be covered by that person or by the customer.

4.5. TASK 5 - Preventive and Corrective Maintenance Services

The support plan will include all preventive and corrective maintenance tasks and, in addition:

Physical maintenance

- Physical maintenance of each server of the Proxmox HCI cluster every six months, through non-disruptive on-site tasks

- Electrical check of power outlets on UPS, PDUs and related systems for the Telephony platform.

- Detailed report if any issues were encountered during the maintenance tasks.

Logical maintenance

Logical maintenance of the platform consists of periodic updates and patches of the open-source solutions involved, as follows:

- Monthly FreePBX updates (including available updates to modules installed)

- Quarterly Proxmox updates (if available and tested stable in our laboratories)

- Quarterly ViciDial updates (if available and tested stable in our laboratories)

The maintenance window must be provided by the end customer and scheduled at least 15 calendar days in advance.

The upgrade process causes no downtime, since all floating IPs can be redirected to Site B so that agents can connect through either site. (Connectivity between sites MUST be available during this process.)

Quality controls

- Verification of DNS servers for FQDNs

- Certificate validation for the configured domain between the different telephony platform nodes.

- Call tests and call-flow confirmation on telephony platform at primary site.

- Codec testing between different nodes, agents and trunks, standardizing codecs to avoid transcoding.

- Load balancing of different services between nodes on the primary site.

- Bandwidth tests for each call.

- Call tests and call-flow confirmation on telephony platform running at secondary site.

- Codec testing between different nodes, agents and trunks, standardizing codecs to avoid transcoding at secondary site.

- Load balancing of different services between nodes on the secondary site.

- Bandwidth tests for each call.

- Documentation of each quality test.
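For the per-call bandwidth tests listed above, it helps to have an expected figure to compare measurements against. The sketch below estimates one direction of a single call, assuming plain RTP over IPv4 on Ethernet with a G.711 codec at 20 ms packetization; the codec choice is an assumption, and encryption overhead (as used by WebRTC) is not modelled.

```python
# Per-packet header overhead in bytes: RTP 12 + UDP 8 + IPv4 20 + Ethernet 18.
DEFAULT_HEADER_BYTES = 12 + 8 + 20 + 18


def call_bandwidth_kbps(payload_bytes: int, packets_per_second: int,
                        header_bytes: int = DEFAULT_HEADER_BYTES) -> float:
    """One-direction bandwidth of a single RTP stream, headers included."""
    bits_per_packet = (payload_bytes + header_bytes) * 8
    return bits_per_packet * packets_per_second / 1000


if __name__ == "__main__":
    # G.711 at 64 kbps with 20 ms packets: 160 payload bytes, 50 packets/s.
    per_call = call_bandwidth_kbps(payload_bytes=160, packets_per_second=50)
    print(f"per call, one direction: {per_call:.1f} kbps")
    print(f"100 concurrent calls: {per_call * 100 / 1000:.2f} Mbps")
```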

Safety Plan

The safety plan will verify the operation of the different services that provide High Availability features. All tests will be executed without touching the production environment to avoid interruption of services.

- VoIP balancing tests between VoIP proxies.

- Agent login balancing tests between HA proxies.

- Stability of the telephony platform.

- Snapshot test for maintenance tasks and consistency check.

- Backup job test of VMs for maintenance tasks and consistency checks.

- Restore of a backed-up VM to a non-production environment, with consistency check.

- Database consistency check on Master and Slave nodes.

- Database replication between primary and secondary site.

- Audiostore replication between primary and secondary site.

- Documentation of each check and report if any issue is observed during processes.

Security Plan

The security plan will verify that security enforcement and best practices are applied, as far as possible, in every maintenance task.

- Vulnerability check for common services and protocols: HTTPS, SIP, NFS, SSH and APIs

- Update of operating system repositories to mitigate issues or CVEs

- Update of ViciDial SVN version to mitigate issues or CVEs

- Auditing of Proxmox Administrators

- Auditing of ViciDial Administrators

- Password policy enforcement

- Internal firewall policy enforcements for every single node on the solution

- Documentation of each check and report if any issue is observed during all processes.

Support Plan

All of the software to be implemented will have an active support contract for three years, and the customer can receive remote or on-site support for better response times.

Our SLAs are defined as follows:

| Priority | Response time after opening a support ticket | Classification (explanation) |
|---|---|---|
| High | 0 to 3 hours for remote support; 0 to 6 hours for on-site support (includes non-business hours) | Requirements that affect business continuity: service disruption, major failure. |
| Medium | According to availability of support engineers, with high priority to avoid major faults or issues (business hours) | Requirements that, without prompt action, can lead to a major failure in the associated services or systems. |
| Normal | According to availability of support engineers (business hours) | Common requirements or configuration changes that do not affect services. Includes training, explanations of functions, or questions. |

- Business hours: Monday to Friday, from 8:00 to 17:00. Public holidays and days off are not included.

- Type of services: remote support or on-site support.

- Limitations: coverage is limited to the perimeter of the capital city. Locations in the interior or in other countries are subject to evaluation, and the customer will be notified by mail or telephone.

- Exceptions: maintenance tasks, implementations and development of functions are NOT classified as Medium or High priority; only tasks related to direct failures are.
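The High priority response times and the business-hours definition above can be turned into a simple check. This is a sketch under stated assumptions: the names are illustrative, public holidays are not modelled, and Medium and Normal priorities are left out because their response time depends on engineer availability rather than a fixed limit.

```python
from datetime import datetime, timedelta

# Maximum response time for High priority tickets, by type of support.
HIGH_PRIORITY_LIMITS = {
    "remote": timedelta(hours=3),
    "on-site": timedelta(hours=6),
}


def high_priority_deadline(opened_at: datetime, support_type: str) -> datetime:
    """Latest response time for a High priority ticket (any hour, any day)."""
    return opened_at + HIGH_PRIORITY_LIMITS[support_type]


def is_business_hours(moment: datetime) -> bool:
    """Monday to Friday, 8:00 to 17:00. Public holidays are not modelled."""
    return moment.weekday() < 5 and 8 <= moment.hour < 17


if __name__ == "__main__":
    opened = datetime(2024, 1, 6, 22, 0)  # a Saturday night
    print("business hours:", is_business_hours(opened))
    print("remote response by:", high_priority_deadline(opened, "remote"))
    print("on-site response by:", high_priority_deadline(opened, "on-site"))
```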

Procedures for support:

The customer must designate a list of employees or staff who can handle support requests directly with our support team.

A previously authorized contact can open a case through:

| Channel | Contact |
|---|---|
| Telephone number | +502 2278-8181 option 9 (support) |
| E-mail | soporte@voxdatacomm.com |
| WhatsApp (business hours only) | https://wa.me/50222788181 |

If the customer sends a support request via e-mail, a case is opened automatically; if this happens during non-business hours, the technician on duty is assigned. The customer will receive e-mail updates about the assigned technician and other follow-ups.

For any case opened by telephone or WhatsApp, notifications and updates will be sent to the requester by e-mail.

Warranty

Hardware warranty is included in the support plan. The customer can open a case with our support center, and all tasks related to warranty claims will be handled by us, coordinating between the manufacturer and our support engineers for on-site tasks.

Dell Servers: 3-year ProSupport 24x7 with NBD on-site hardware replacement for Latin America

Dell Switches: 3-year basic hardware warranty repair with 5x10 hardware replacement and NBD on-site services for Latin America

Dinstar Equipment: 3-year basic hardware warranty 24x7 with NBD on-site hardware replacement.

UPS: 3-year support with NBD on-site services.

Exceptions: end-user hardware such as headsets, batteries, cables and cords.

6.0 Period of Performance

The support contract for the entire platform will run for a total of three years, starting from the delivery of the products and solutions offered in this proposal.

a) Past performance projects similar in nature to this project:

VoxDatacomm has 12 years of experience with telephony and contact center platforms, and has enterprise customers running distributed telephony services similar to those described in "Draft Design". Some of these customers are:

- Banco GyT Continental de Guatemala

- Bodega Farmacéutica (Farmacias Cruz Verde / Meykos)

- Cuentame Guatemala (contact center and BPO)

b) Point of Contact (POC) to coordinate with INL:

For every implementation task, coordination or follow-up there will be a contact assigned to this project who will act as Project Manager for VoxDatacomm, S.A. The PNC is required to designate the following points of contact for tasks with specific roles.
